- Posted on : March 24, 2025

- Industry : Corporate

- Tech Focus : Ignis

- Type: Blog

Exploring Azure AI Foundry: A Deep Dive into Low-Code/No-Code AI Development

Low-code and no-code platforms have changed the AI development landscape by lowering the technical barrier for users with limited programming expertise. To harness this GenAI-powered enablement and foster innovation and efficiency, we researched several of the available low-code and no-code platforms.

We considered community reviews and overall popularity, then evaluated Azure AI Foundry, Microsoft Copilot Studio, FlowiseAI, StackAI, Botpress, and Langflow.

Of these, Microsoft Azure AI Foundry stood out as a robust platform. It enables users to build AI solutions with minimal coding through intuitive visual interfaces, drag-and-drop components, and pre-built templates. In this blog, we’ll explore Azure AI Foundry’s features, strengths, and limitations, along with insights gathered from research and practical demonstrations.

Key Features

Azure AI Foundry is a cloud-based platform that integrates seamlessly with other Azure services, including Azure OpenAI, Azure Cognitive Services, and Azure AI Search, to offer a complete development environment. Key features include:

- No-code/Low-code Interface: Azure AI Foundry offers ready-to-use templates and a drag-and-drop interface for creating AI applications.

- Model Deployment: Azure AI Foundry supports deploying models as web services, containers, or code, allowing users to integrate AI models into various applications.

- Monitoring & Logging: The platform offers robust monitoring tools, enabling users to track model performance and log metrics during deployments.

- Security and Compliance: Azure AI Foundry adheres to GDPR and HIPAA standards, ensuring data security with encryption and role-based access control.

- Generative AI Capabilities: The platform facilitates the development and deployment of generative AI models. By integrating custom data, users can enhance models using the Retrieval-Augmented Generation (RAG) technique.

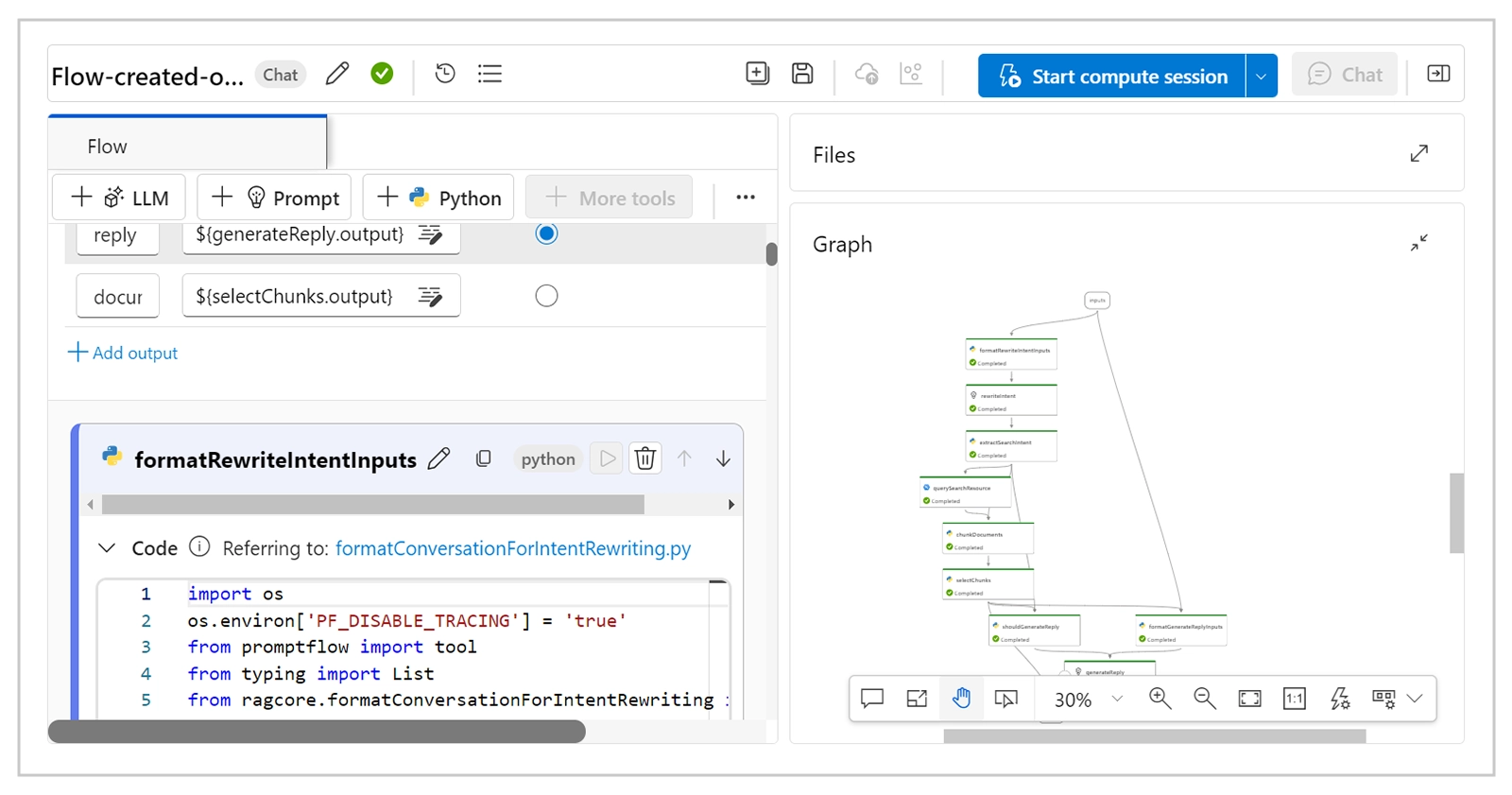

- Prompt Flow: This feature allows defining workflows that integrate AI models, prompts, and custom logic, streamlining the development process. Users can apply low-code customizations to create tailored AI solutions (Fig. 2).

Building RAG Solutions

Azure AI Foundry excels in developing Retrieval-Augmented Generation solutions. A step-by-step approach allows users to:

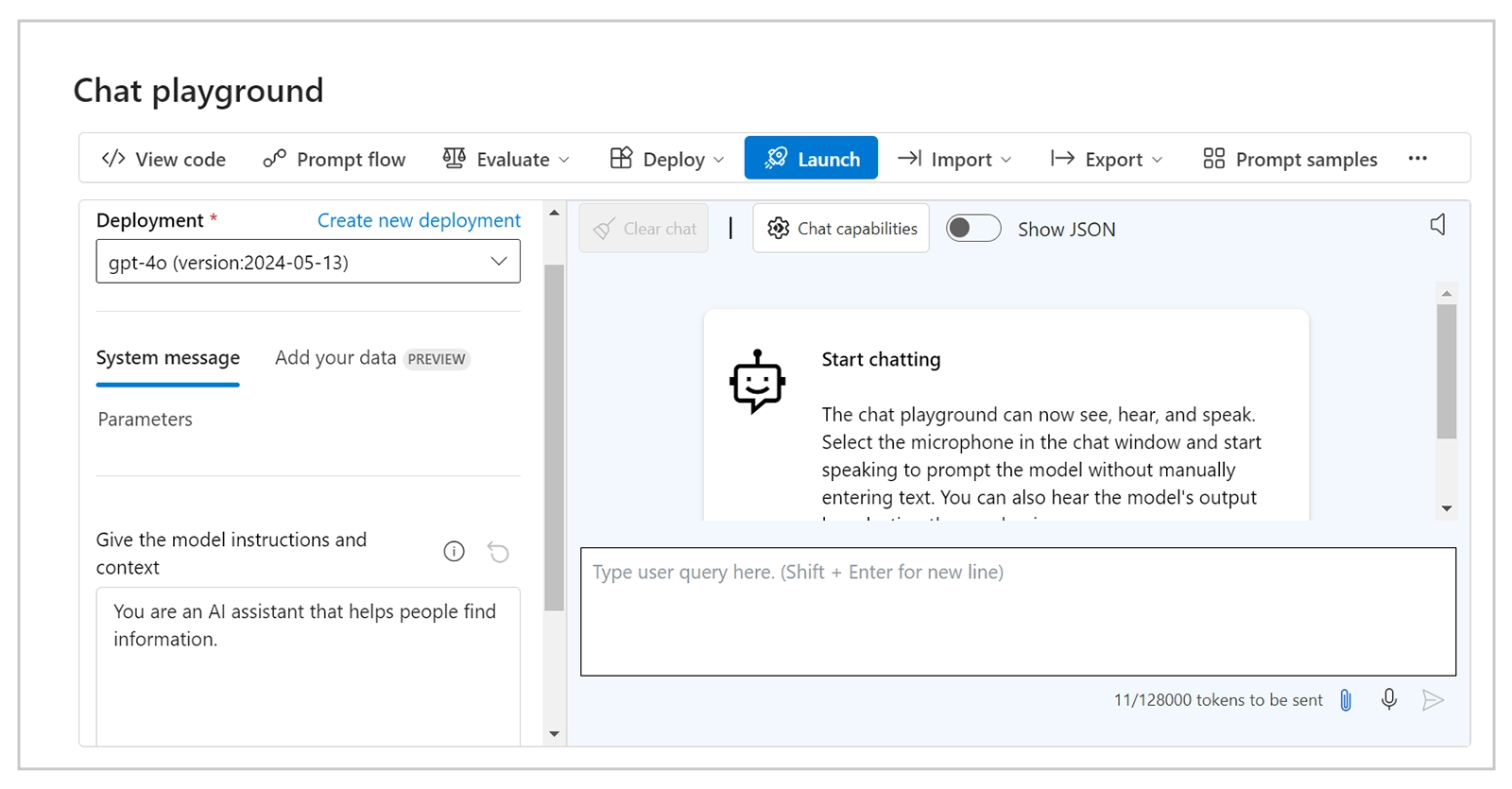

- Create a RAG Workflow by using the Chat Playground to experiment with GenAI models and incorporate data from custom sources.

- Deploy and Integrate via Azure endpoints for real-time use. This ensures scalability and performance optimization, leveraging Azure's infrastructure.

- Customize in Prompt Flow to modify and fine-tune models through workflows, enabling a tailored approach for different applications.
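The retrieve-then-generate pattern behind these steps can be sketched in a few lines of Python. This is a minimal illustration, not the platform's implementation: the sample documents, the keyword-overlap retriever, and the `generate` stub are all assumptions made for the example. In Azure AI Foundry, retrieval would be served by an index over your own data and generation by a deployed model endpoint.

```python
import re
from collections import Counter

# Hypothetical "custom data"; a real solution would index uploaded documents.
DOCUMENTS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Standard shipping takes 3 to 5 business days within the country.",
    "Support is available by email on weekdays from 9am to 5pm.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, documents, top_k=2):
    """Toy retriever: rank documents by how many question terms they contain."""
    q_terms = set(tokenize(question))
    scored = []
    for doc in documents:
        term_counts = Counter(tokenize(doc))
        overlap = sum(n for term, n in term_counts.items() if term in q_terms)
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no terms with the question.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question, passages):
    """Ground the model by placing the retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def generate(prompt):
    """Stand-in for a deployed chat model (assumption: no real endpoint is called)."""
    return f"[model response to a {len(prompt)}-character prompt]"


question = "How long do refunds take?"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)
answer = generate(prompt)
```

Swapping `retrieve` for a search index query and `generate` for a call to a deployed endpoint leaves the overall shape unchanged, which is why the playground-to-deployment path can stay low-code.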

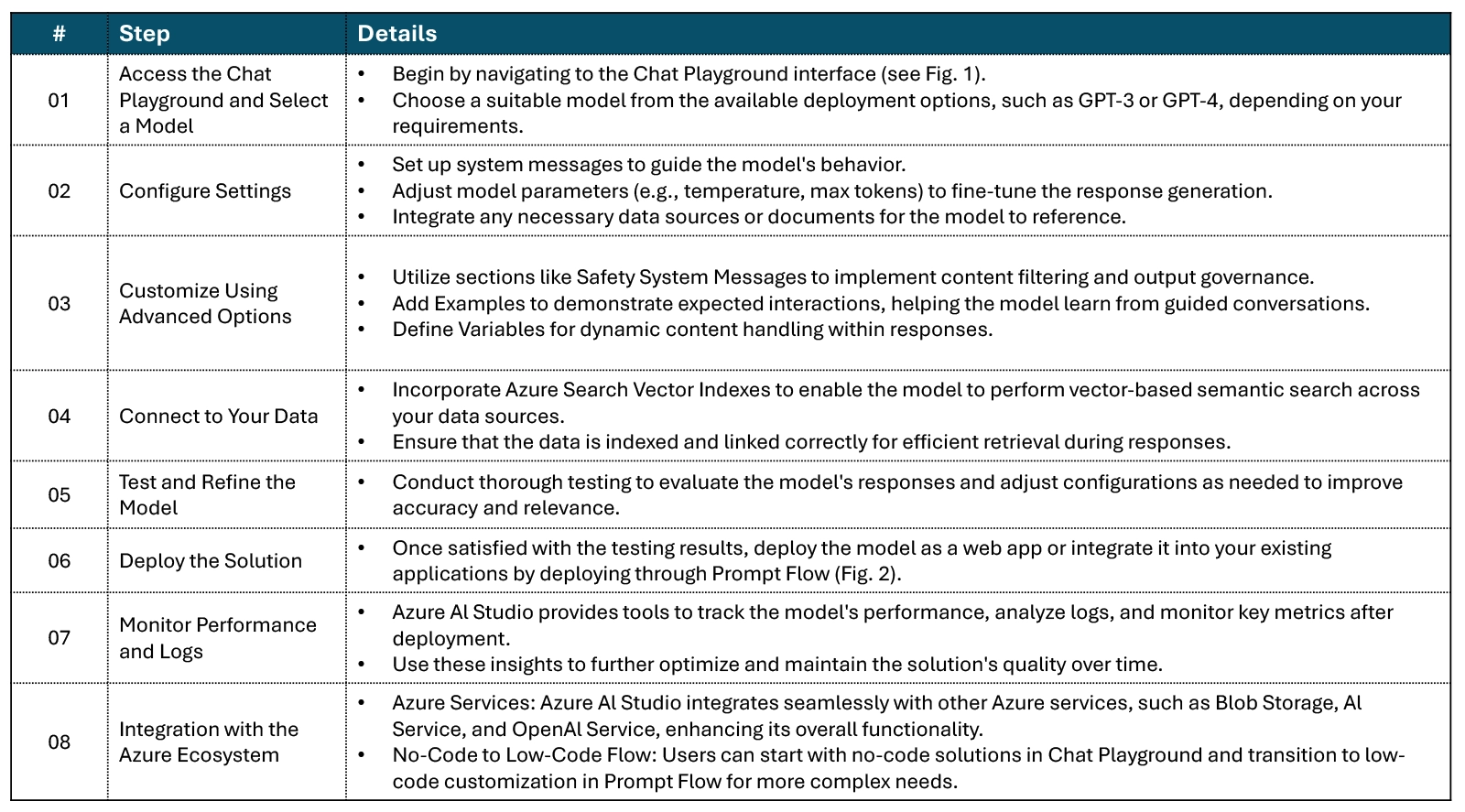

Steps to Build a RAG Solution Using Chat Playground in Azure AI Studio

Fig 1: Chat Playground Interface

Fig 2: Prompt Flow Interface

Key Takeaways and Learnings

- Hybrid Search Techniques: Combining keyword and semantic search leads to more accurate results than either method alone.

- Output Governance includes features that encourage model reliability, such as asking the model to "think twice" before finalizing output.

- Azure AI Foundry offers several advantages over other tools:

- Ease of Use: Its no-code/low-code functionality opens AI development to a wider audience, from business users to technical experts.

- Scalability: Azure's infrastructure ensures that models can be scaled to handle large datasets and high user volumes without compromising performance.

- Customizability: While the platform offers pre-built models, developers can customize workflows and models to suit specific needs.

- Integration Capabilities: Azure AI Foundry’s integration with other Azure services, such as Azure OpenAI and Azure API Management, simplifies deployment and management of applications.

- While Azure AI Foundry provides many powerful features, there are some areas where improvements can be made:

- Vendor Lock-in: Since the platform is built within the Microsoft ecosystem, migrating applications to other cloud platforms may pose compatibility issues.

- Learning Curve: Despite its user-friendly interface, mastering the platform takes time, especially when it comes to advanced AI concepts and model fine-tuning.

- Costs in Fine-Tuning: Fine-tuning pre-trained models on custom data can be complex and expensive, particularly for large-scale applications.
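To make the hybrid search takeaway above concrete, the sketch below merges a keyword ranking and a semantic ranking with Reciprocal Rank Fusion (RRF), one common fusion method. The choice of RRF, the document IDs, the two input rankings, and the constant `k=60` are assumptions for illustration; the source does not state which fusion method was used in our experiments.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so items ranked well by both retrievers rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score; break ties alphabetically for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword retriever and a semantic retriever.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
semantic_ranking = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_ranking, semantic_ranking])
print(fused)
```

Here `doc_b` and `doc_a` appear in both lists and therefore outrank `doc_c` and `doc_d`, each of which only one retriever found.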

The Path Forward

Azure AI Foundry is a comprehensive, scalable solution for developing AI applications with minimal coding. It caters to both technical and non-technical users, enabling them to build, deploy, and manage AI models with ease. While the platform excels in flexibility and scalability, it also presents challenges like vendor lock-in and the learning curve for non-experts.

Going forward, enhancements in cross-cloud compatibility and reduced fine-tuning complexity could make Azure AI Foundry even more appealing to enterprises and developers alike.