- Posted on : February 10, 2025

- Industry : All

- Tech Focus : IGNIS

- Type: Blog

Azure Prompt Flow for Iterative Evolution of Prompts

Large Language Models (LLMs) are probabilistic models trained largely on internet content, so although they can produce text that is easy to believe, that text can also be incorrect, unethical, or prejudiced. Their output can also vary from run to run, even when the inputs remain unchanged.

Such errors can cause reputational or even financial damage to brands that publish LLM-generated content, so those brands must continually evaluate model outputs for quality, accuracy, and slant.

There are several methods for testing output, and the best one depends on the use case. At Infogain, when we need to evaluate prompt effectiveness and response quality, we use Azure Prompt Flow.

In Prompt Flow, evaluation flows take the required inputs and produce corresponding outputs, which are usually scores or metrics.

Evaluation flows differ from standard flows in how they are authored and how they are used. Their special features include:

- They usually run after the run being tested and receive its outputs, which they use to calculate scores and metrics. The outputs of an evaluation flow are therefore the results that measure the performance of the flow being tested.

- They may have an aggregation node that calculates the overall performance of the flow being tested over the test dataset.
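The two features above can be sketched in plain Python. This is a minimal illustration, not Prompt Flow's own API: the function names `grade_line` and `aggregate`, the exact-match scoring rule, and the sample rows are all assumptions made for the example. In an actual evaluation flow, each function would roughly correspond to a Python node, with the second one acting as the aggregation node.

```python
from statistics import mean


def grade_line(answer: str, groundtruth: str) -> int:
    """Per-row scoring step: 1 if the answer matches the expected text, else 0.

    Exact match (case- and whitespace-insensitive) is an assumed scoring rule.
    """
    return int(answer.strip().lower() == groundtruth.strip().lower())


def aggregate(grades: list[int]) -> dict[str, float]:
    """Aggregation step: summarize per-row grades over the whole test dataset."""
    return {
        "accuracy": mean(grades) if grades else 0.0,
        "count": float(len(grades)),
    }


# Hypothetical outputs of the run being tested, paired with expected answers.
rows = [
    {"answer": "Paris", "groundtruth": "paris"},
    {"answer": "Berlin", "groundtruth": "Munich"},
    {"answer": " 42 ", "groundtruth": "42"},
    {"answer": "blue", "groundtruth": "Blue"},
]

grades = [grade_line(r["answer"], r["groundtruth"]) for r in rows]
metrics = aggregate(grades)
print(metrics)
```

Keeping the per-row score separate from the aggregate makes it possible to inspect individual failures as well as the overall number.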

As AI applications driven by LLMs gain momentum worldwide, Azure Machine Learning Prompt Flow offers a seamless solution for the development cycle from prototyping to deployment. It empowers you to:

- Visualize and Execute: Design and execute workflows by linking LLMs, prompts, and Python tools in a user-friendly, visual interface.

- Collaborate and Iterate: Easily debug, share, and refine your flows with collaborative team features, ensuring continuous improvement.

- Test at Scale: Develop multiple prompt variants and evaluate their effectiveness through large-scale testing.

- Deploy with Confidence: Launch a real-time endpoint that maximizes the potential of LLMs for your application.

We believe that Azure Machine Learning Prompt Flow is an ideal solution for developers who need an intuitive and powerful tool to streamline LLM-based AI projects.

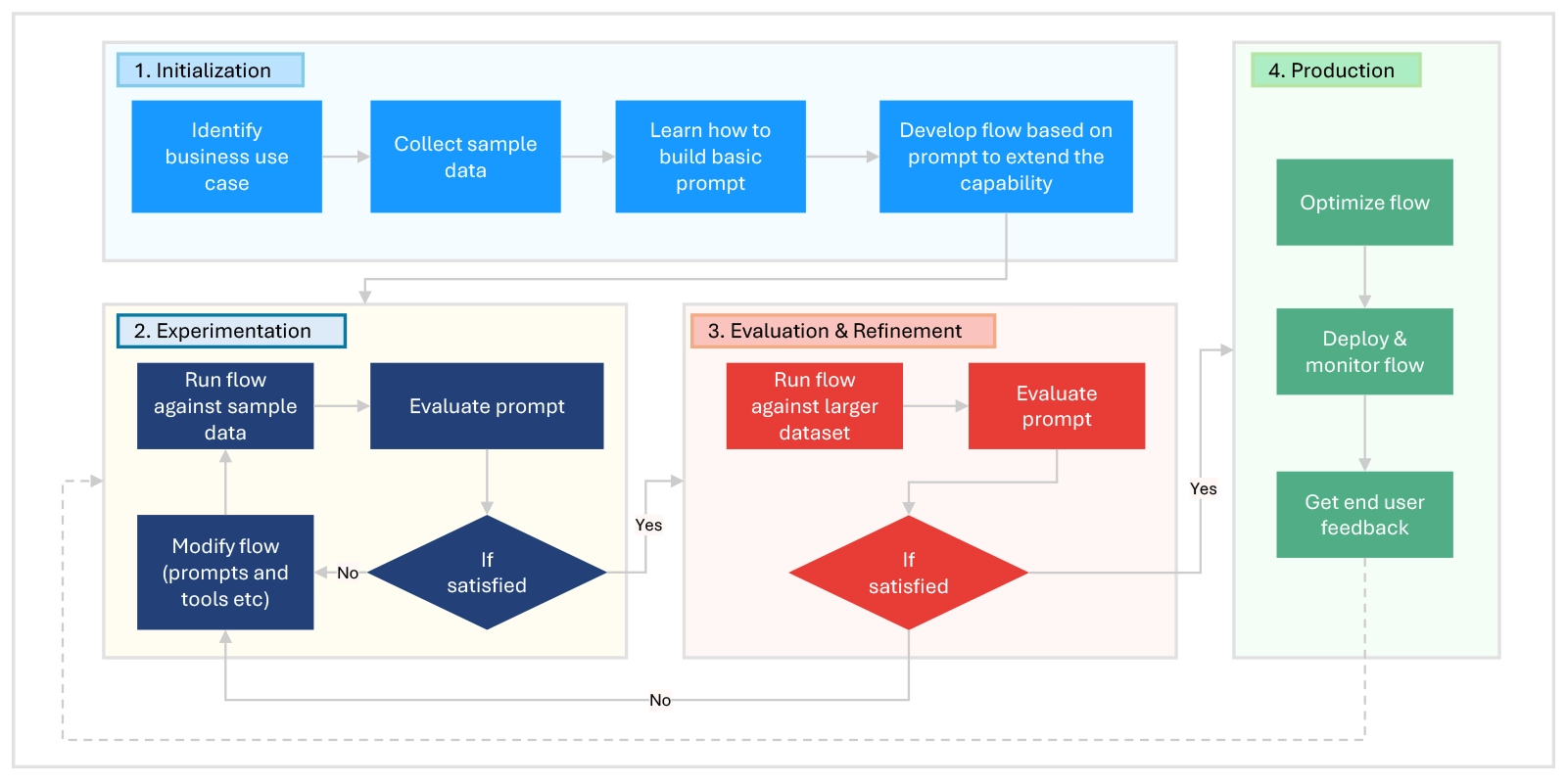

LLM-based application development lifecycle

Azure Machine Learning Prompt Flow streamlines AI application development through four main stages:

- Initialization: Identify the business use case, collect sample data, build a basic prompt, and develop an extended flow.

- Experimentation: Run the flow on sample data, evaluate and modify as needed, and iterate until satisfactory results are achieved.

- Evaluation & Refinement: Test the flow on larger datasets, evaluate performance, refine as necessary, and proceed if criteria are met.

- Production: Optimize the flow, deploy and monitor in a production environment, gather feedback, and iterate for improvements.

Figure 1: Prompt Flow Lifecycle

Example:

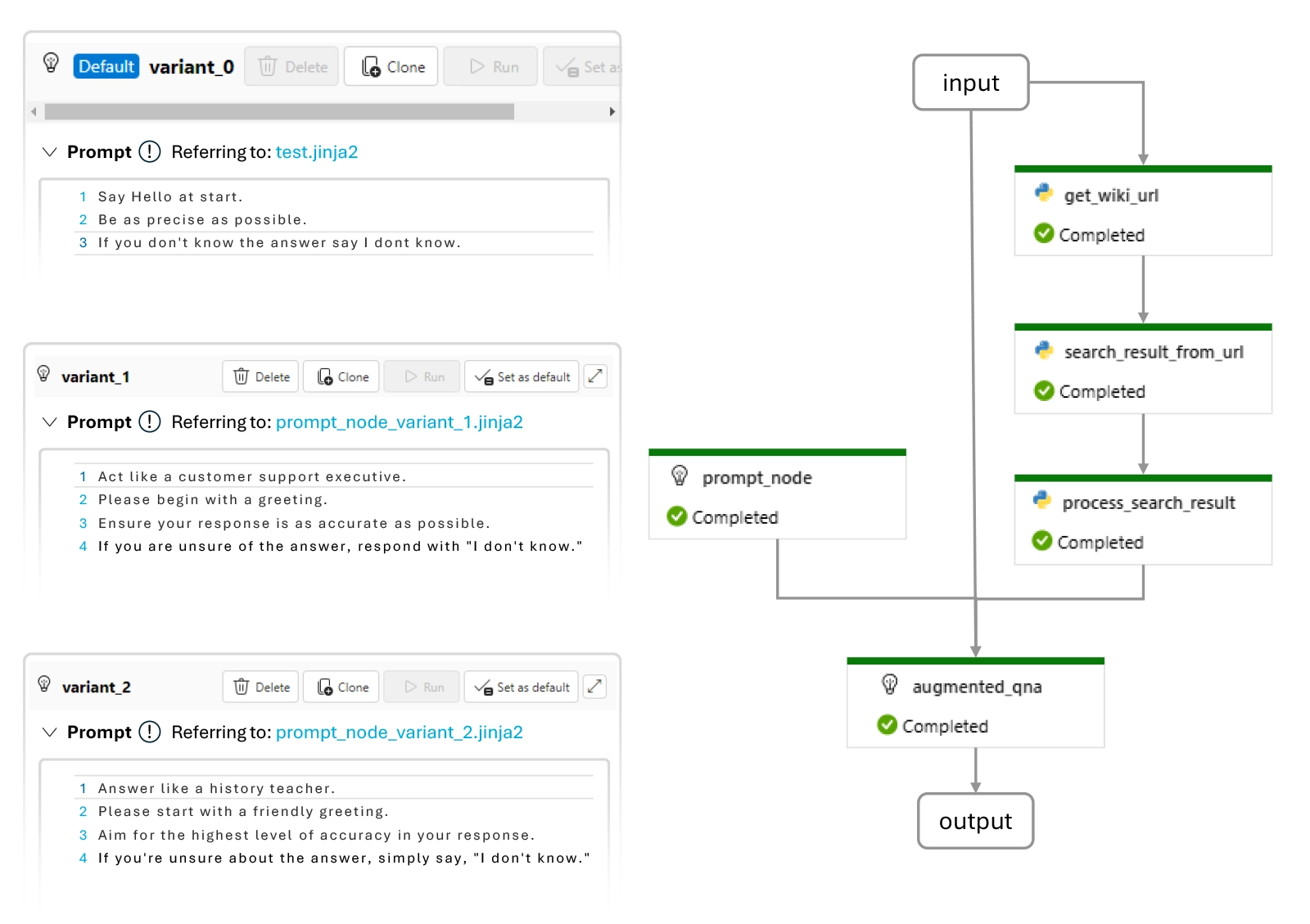

This flow demonstrates a Q&A application built on GPT, with answers grounded in information from Wikipedia. The flow searches for a relevant Wikipedia link and extracts the contents of that page. The extracted contents are then used to augment the prompt sent to the GPT chat API, which generates the response.

Figure 2: AskWiki Flowchart and prompt variants

Figure 2 illustrates the steps we took to link the inputs to the various processing stages and produce the outputs.
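The prompt augmentation step can be sketched as follows. This is a simplified illustration under stated assumptions, not the template's actual code: `build_augmented_prompt`, the `max_chars` limit, and the wording of the system message are hypothetical, and the Wikipedia search and page extraction are replaced by a hard-coded string so the example runs without network access.

```python
def build_augmented_prompt(
    question: str, page_text: str, max_chars: int = 500
) -> list[dict[str, str]]:
    """Build chat messages that ground the answer in retrieved page text.

    The page text is truncated to max_chars (an assumed limit) so the
    prompt stays within a manageable size.
    """
    context = page_text[:max_chars]
    return [
        {
            "role": "system",
            "content": (
                "Answer using only the context provided. "
                "If the context does not contain the answer, say so."
            ),
        },
        {
            "role": "user",
            "content": f"Context:\n{context}\n\nQuestion: {question}",
        },
    ]


# Stand-in for the text extracted from a Wikipedia page.
page_text = "Paris is the capital and most populous city of France."
messages = build_augmented_prompt("What is the capital of France?", page_text)

for message in messages:
    print(f"[{message['role']}] {message['content']}")
```

The resulting list follows the common role/content chat message shape, so it could be passed to a chat completion call in whatever client the application uses.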

We used Azure Prompt Flow's "Ask Wiki" template and devised three distinct prompt variants for iterative testing. Each variant can be adjusted by updating its prompt and tuned through LLM settings such as the temperature and the model deployment. In this example, however, we focused solely on the prompts. This approach let us compare the variants across predefined metrics such as Coherence, AdaSimilarity, Fluency, and F1 score, which supports efficient development and improvement of robust AI applications.
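To make one of these metrics concrete, here is a minimal sketch of an F1 score for Q&A, assuming it is computed as token-overlap F1 between the generated answer and the ground truth. The function name `token_f1` and the simple lowercase, whitespace-based tokenization are assumptions for illustration; the built-in Prompt Flow metric may normalize text differently.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a generated answer and a reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()

    # If either side is empty, score 1.0 only when both are empty.
    if not pred_tokens or not ref_tokens:
        return float(pred_tokens == ref_tokens)

    # Count tokens shared by both sides, respecting repeated tokens.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0

    precision = overlap / len(pred_tokens)  # share of predicted tokens that are correct
    recall = overlap / len(ref_tokens)      # share of reference tokens that were found
    return 2 * precision * recall / (precision + recall)


print(token_f1("The capital is Paris", "Paris"))   # 1 shared token out of 4 predicted
print(token_f1("Paris", "Paris"))                  # identical answers
print(token_f1("London", "Paris"))                 # no shared tokens
```

A verbose but correct answer is penalized on precision here, which is one reason F1 is usually read alongside metrics such as Coherence and Fluency rather than on its own.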

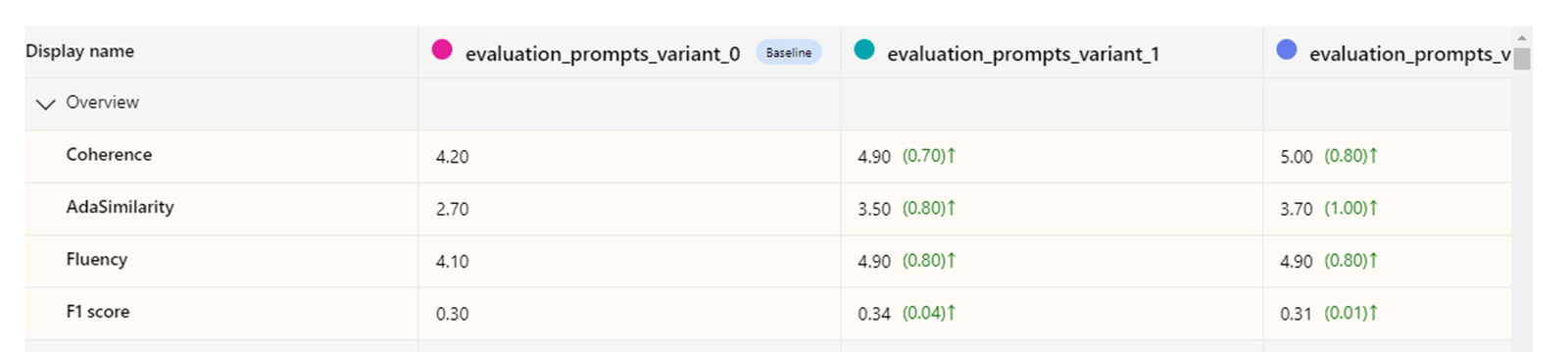

Figure 3: Output Comparison

Figure 3 shows our iterative process beginning with Variant 0, which initially yielded unsatisfactory results. Learning from this, we refined the prompts to create Variant 1, which showed incremental improvement. Building on these insights, Variant 2 was developed, exhibiting further enhancements over Variant 1. This iterative cycle involved continuous prompt updates and re-evaluation with diverse sets of Q&A data.
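The comparison step in this cycle can be sketched as a simple ranking of variants by their average score on one metric. The scores below are placeholder values invented for illustration only; they are not the results shown in Figure 3, and `rank_variants` is a hypothetical helper rather than part of Prompt Flow.

```python
from statistics import mean

# Placeholder per-question scores for each variant on a single metric.
# These numbers are illustrative only and are NOT the results from this post.
scores = {
    "variant_0": [0.2, 0.4, 0.3],
    "variant_1": [0.5, 0.5, 0.6],
    "variant_2": [0.7, 0.8, 0.6],
}


def rank_variants(per_variant: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (variant, average score) pairs sorted from best to worst."""
    averages = {name: mean(values) for name, values in per_variant.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


ranking = rank_variants(scores)
for name, average in ranking:
    print(f"{name}: {average:.2f}")

best_name, best_average = ranking[0]
print(f"Best variant on this metric: {best_name}")
```

In practice the same ranking would be repeated per metric, since a variant that leads on one metric does not necessarily lead on the others.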

Through this iterative refinement and evaluation process, we aimed to identify and deploy the most effective prompts for our application. Doing so improved our performance metrics and helped ensure that the application answers complex user queries with precision and reliability. Each iteration brought us closer to optimal performance, reflecting our commitment to excellence in AI-driven solutions.